Benchmark the Risk Profile of Your AI Coding Posture

Developers have fully embraced AI coding tools, and they have no plans to let go. With these tools they ship more, debug faster, and automate the tedious parts of the job. That’s a good thing.

However, when you gain speed, it’s easy to lose control.

The AI Governance Gap

Research from DORA and McKinsey shows that over 90% of developers now use AI tools at work. Yet most organizations still lack clear AI-use policies and controls.

AI coding assistants aren’t like other productivity tools. They generate and modify source code, touch production systems, and can leak sensitive data or pull in unverified dependencies.

That makes AI-assisted coding a new attack surface hiding inside your development workflow, so a clear governance plan and security controls aren’t optional.

That’s why we created the AI Coding Risk Assessment.

A New Benchmark for AI Coding Risk

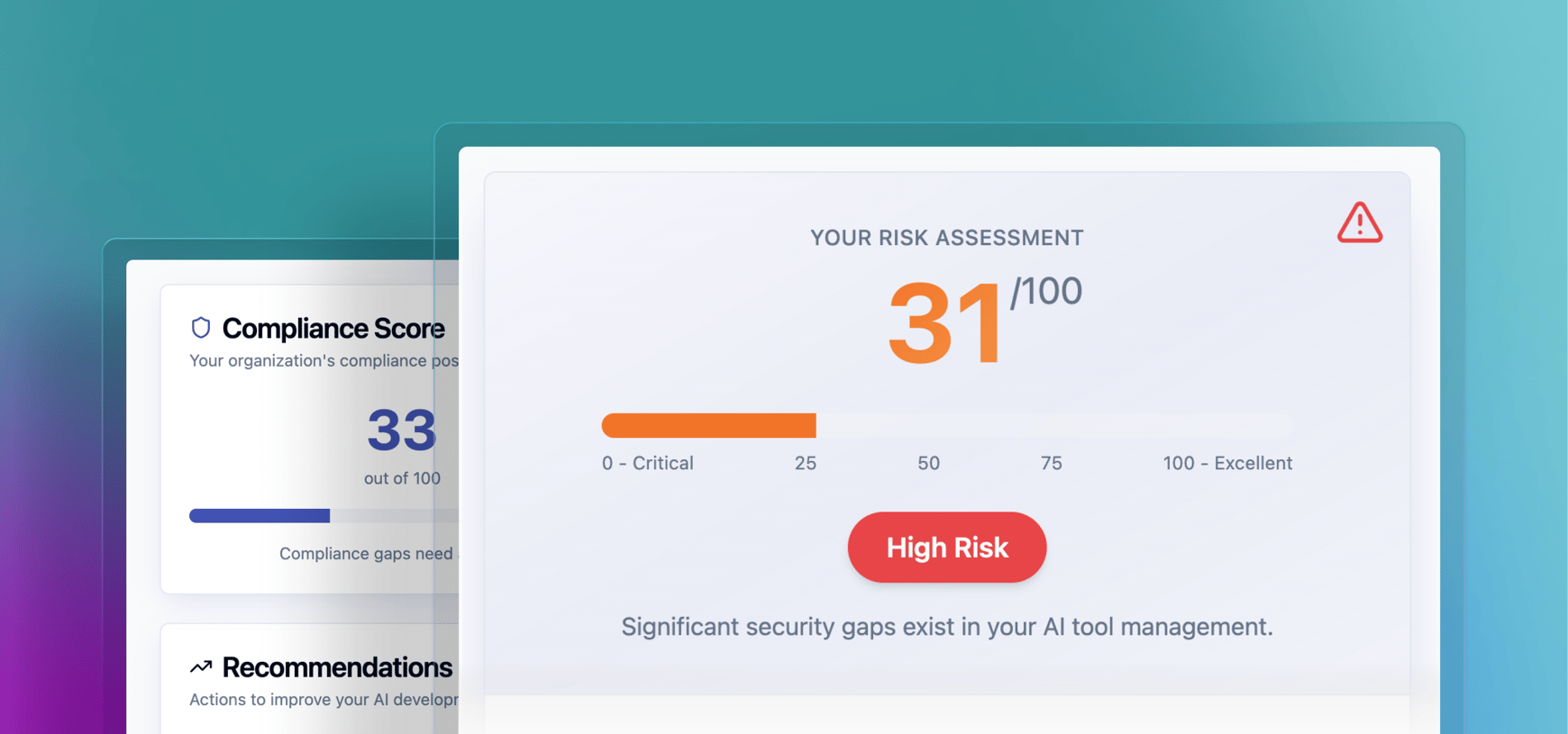

The AI Coding Risk Assessment is a 24-question survey designed for security-minded engineering leaders. It measures the security and compliance posture of your organization’s AI-assisted development workflows and gives you three practical takeaways:

- A 0–100 score that reveals the strength of your AI coding security posture

- A live benchmark that shows how you compare to other organizations in your industry

- A custom checklist with recommended actions and trusted resources to close the gaps

The free assessment builds on guidance from OWASP’s LLM and GenAI Incident Response Guides, DORA’s research, and Ray Eitel-Porter’s “Governing the Machine”, among other resources that capture the first generation of AI governance and security best practices.

Take the Free Assessment

AI-assisted coding is here for good. The teams that succeed will be the ones building the right guardrails and culture around it.

Take the AI Coding Risk Assessment below, see your benchmark, and put your custom recommendations into practice to move toward safer, more compliant AI-assisted development.

How does your organization stack up?
