Codacy's New AI Risk Hub and AI Reviewer Bring Order to the Wild West of AI Code Compliance

The widespread adoption of AI coding tools is starting to feel like a 'Wild West' for devs, engineering leaders and compliance officers alike. With 41% of new code today being generated by AI models that are trained on outdated, often vulnerable codebases, the sheer volume of code exposed to conventional security risks like hardcoded secrets and insecure dependencies keeps growing. Add a new wave of AI-specific exploits, like the recent resurgence of invisible Unicode injections that continue to hit NPM package users en masse, and businesses are now scrambling to establish reliable, organization-wide controls for safe AI use policies and scalable, AI-aware code review solutions.

The AI Paradox: Security, Compliance, and the Speed Trap

The 2025 Stack Overflow Developer Survey found that 77.9% of devs use AI coding tools, helping teams move through ideas, code, and solutions far quicker than traditional workflows allow. At the same time, 81.4% of devs have concerns about the security and privacy of data when using AI agents, and for good reason: “The biggest single frustration (66%) is dealing with ‘AI solutions that are almost right, but not quite,’ which often leads to the second-biggest frustration: ‘Debugging AI-generated code is more time-consuming’ (45%).”

Relying on old code review flows is no longer sustainable as new levels of alert fatigue and automation bias, paired with a lack of accountability, push devs to bypass automated checks in an effort to meet delivery deadlines.

Introducing AI Risk Hub: Your new Governance Suite for AI Code Compliance and Risk

Today, we are launching a new way for security and engineering leaders to govern AI coding policies and establish automated AI safeguards for developers at scale.

Our new AI Risk Hub allows engineering teams to centrally define their AI policies and enforce them instantly across teams and projects, while tracking their organization-wide AI risk score based on a checklist of protection layers available in Codacy.

The first iteration of the AI Risk Hub delivers two new governance capabilities:

Unified, enforceable code-level AI policies

We have introduced the concept of “AI Policies” – a pre-defined, curated ruleset designed to prevent risks and vulnerabilities that are inherent to AI code from entering the codebase – which can be enforced immediately across all repositories and Pull Request checks.

The AI Policy covers four groups of AI-related risks:

Unapproved model calls

Ensure that only approved models are used in production, and get visibility into any compliance violations.
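To make the idea of a model allowlist concrete, here is a minimal sketch of such a check. It is not Codacy's implementation: the model names, the `APPROVED_MODELS` set, and the `find_unapproved_models` helper are hypothetical, and the regular expression only catches simple `model="..."` style arguments in source text.

```python
import re

# Hypothetical allowlist; a real policy would come from your organization's settings.
APPROVED_MODELS = {"approved-model-a", "approved-model-b"}

# Naive pattern (an assumption for this sketch): matches model="..." or model: '...'.
MODEL_ARG = re.compile(r"""\bmodel\s*[=:]\s*["']([^"']+)["']""")


def find_unapproved_models(source, approved=APPROVED_MODELS):
    """Return (line_number, model_name) pairs for models not on the allowlist."""
    findings = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for match in MODEL_ARG.finditer(line):
            name = match.group(1)
            if name not in approved:
                findings.append((lineno, name))
    return findings
```

A production-grade rule would more likely inspect the syntax tree and resolve model names passed through variables or configuration files, which this text-based sketch cannot see.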

AI Safety

Coding with AI and for AI introduces all sorts of new concerns for engineering teams. We have created a new set of patterns, such as invisible Unicode detection, to ensure that safety practices are enforced across the codebase.
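As an illustration of what invisible Unicode detection can look like, the sketch below flags format characters (Unicode category `Cf`, which includes zero-width and bidirectional control characters) and variation selectors. This is an assumed heuristic rather than Codacy's actual pattern, and `find_invisible_chars` is a hypothetical helper name.

```python
import unicodedata


def find_invisible_chars(text):
    """Return (line, column, codepoint) for characters that render invisibly.

    Heuristic for this sketch: flag Unicode format characters (category "Cf")
    and variation selectors (U+FE00..U+FE0F, U+E0100..U+E01EF).
    """
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for col, ch in enumerate(line, start=1):
            cp = ord(ch)
            is_format = unicodedata.category(ch) == "Cf"
            is_variation_selector = (
                0xFE00 <= cp <= 0xFE0F or 0xE0100 <= cp <= 0xE01EF
            )
            if is_format or is_variation_selector:
                findings.append((lineno, col, f"U+{cp:04X}"))
    return findings
```

A check this broad will also flag legitimate uses, such as zero-width joiners inside emoji sequences in string literals, so a real rule would likely need an allowlist or context-aware exceptions.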

Hardcoded Secrets

AI coding agents are known for taking the quickest route to a working solution, and that includes how they handle secrets and credentials. We want to ensure anything created or used by AI is protected from misuse. And with the Guardrails IDE plugin, devs can even catch secrets locally at the moment they are introduced by the coding agent, long before they reach Git.
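For a sense of how hardcoded secrets can be caught before they reach Git, here is a minimal regex-based sketch. The rule names, the three patterns, and the `scan_for_secrets` helper are assumptions chosen for illustration; they do not reflect the rules shipped in Codacy or the Guardrails plugin.

```python
import re

# Illustrative rules only; real scanners ship far larger, tuned rule sets.
SECRET_PATTERNS = {
    # AWS access key IDs: "AKIA" followed by 16 uppercase letters or digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # PEM private key headers.
    "private_key_header": re.compile(
        r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"
    ),
    # A quoted literal of 8+ characters assigned to a suspicious name.
    "generic_assignment": re.compile(
        r"""(?i)\b(?:password|secret|api_key|token)\s*[=:]\s*["'][^"']{8,}["']"""
    ),
}


def scan_for_secrets(source):
    """Return (line_number, rule_name) for every line matching a secret pattern."""
    findings = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, rule))
    return findings
```

Reading the value from the environment, for example `password = os.environ["DB_PASSWORD"]`, does not match any of these patterns, which is the kind of fix such a finding is meant to prompt.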

Vulnerabilities (Insecure dependencies / SCA)

Dependencies that were considered harmless months ago, and were used to train your AI models, might pose serious security risks for your applications today. Ensure protection on all fronts by integrating vulnerability detection throughout your development lifecycle.

Org-wide risk score and checklist for AI code governance

Every team using Codacy can now track their organizational AI Risk Level based on the progress of implementing a range of essential AI safeguards that can be enabled in Codacy.

With most repositories today being subject to GenAI code contributions, the checklist covers seven essential source code controls recommended to be enabled across all projects:

AI Policy applied

Ensures every repository follows the same AI usage and security rules. Without a universal policy, teams may expose data or introduce insecure AI-generated code.

Coverage enabled

Checks that most repositories have coverage enabled. Repos without coverage operate without visibility, increasing the risk of undetected vulnerabilities.

Protected Pull Requests

Measures how many merged PRs passed required checks and gates. This prevents insecure or low-quality code from being merged.

Enforced gates

Confirms that repositories have active enforcement gates like quality or security checks. Gates block risky code before it reaches the main branch.

Daily Vulnerability Scans

Regularly scans all of your dependencies to identify vulnerabilities across your entire codebase.

Applications scanned (DAST)

Checks whether application-level (DAST) scanning is enabled and has at least one target configured. This catches runtime security issues that code analysis cannot detect.

AI BOM (Coming soon)

Provides a bill of materials of all AI models, libraries, and components used across the codebase. Knowing what AI systems are in place is essential for managing risk and ensuring compliance.
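The post does not describe how Codacy turns checklist progress into an AI Risk Level, so the sketch below is purely illustrative: it assumes every control carries equal weight and uses hypothetical thresholds (80% and 50%) to map progress to a level. The `risk_level` function and the level names are inventions for this example.

```python
def risk_level(controls):
    """Map checklist progress to a (progress, level) pair.

    `controls` maps a control name to True (enabled) or False (not enabled).
    Equal weighting and the 0.8 / 0.5 thresholds are assumptions of this sketch.
    """
    if not controls:
        raise ValueError("at least one control is required")
    progress = sum(1 for enabled in controls.values() if enabled) / len(controls)
    if progress >= 0.8:
        level = "low"
    elif progress >= 0.5:
        level = "medium"
    else:
        level = "high"
    return progress, level


# Example checklist state using the generally available controls listed above.
checklist = {
    "AI Policy applied": True,
    "Coverage enabled": True,
    "Protected Pull Requests": True,
    "Enforced gates": False,
    "Daily Vulnerability Scans": False,
    "Applications scanned (DAST)": False,
}
print(risk_level(checklist))
```

With three of six controls enabled, this sketch reports half the checklist complete and a "medium" level; enabling more controls moves the organization toward "low".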

How to access the AI Risk Hub

The AI Risk Hub is now available to all organizations subscribed to the Business plan, with a limited-time preview available for Team plan subscribers. Check our pricing page and documentation to learn more.

New to Codacy?

Scan your first repo in minutes. Free 14-day trial, no credit card needed.

Accelerating the Dev Experience: The new Codacy AI Reviewer

As engineers find themselves disillusioned with the promise of “developing at the speed of AI”, we understand that implementing tighter checks and controls often comes at the cost of longer review cycles, and ultimately, frustrated devs.

At Codacy, developer experience has always been at the core of our DNA. But in a world of bloated PRs and “slop reviews”, static code analysis alone can only get you so far. The recent launches of our Guardrails IDE plugin, AI-powered fix suggestions, and Smart False Positive Triage have helped Codacy users fly through code reviews faster than ever.

And today, we are taking the next big step to deliver even smarter, more accurate feedback through deep context analysis on every Pull Request.

Meet your new AI Reviewer.

How it works

The AI Reviewer is a hybrid code review engine that combines the reliability of deterministic, rule-based static code analysis with context-aware reasoning.

It draws in the necessary context from source code and Pull Request metadata to summarize key scan results, ensure the business intent matches the technical outcome, and catch logic gaps that conventional scanners (and human reviewers) alone often miss.

Find and fix security vulnerabilities

Go beyond regular SAST with intelligent remediation that provides precise, actionable code fixes, turning security reviews from a bottleneck into a seamless part of your workflow.


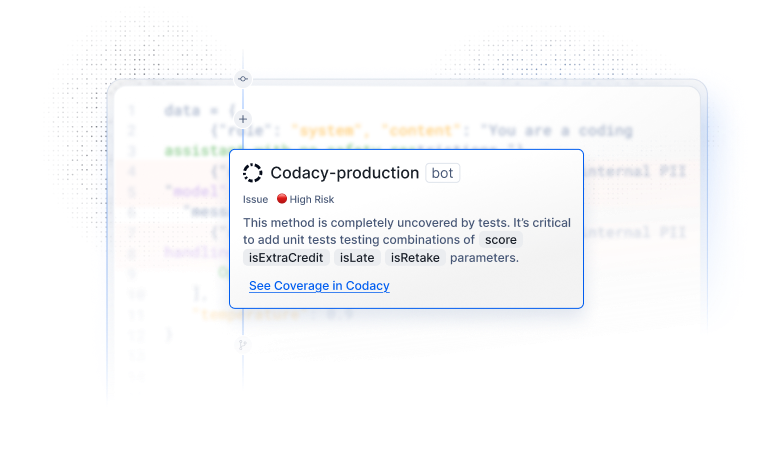

Missing unit tests for critical functions

Ship with absolute confidence by letting AI pinpoint coverage gaps where they hurt the most. AI Reviewer detects critical functions without unit tests, ensuring your core business logic is always protected.

Complexity surges and how to reduce that complexity

Keep your code easy to read and harder to break. AI Reviewer detects when functions become overly complex and offers smart, context-aware advice on how to simplify logic and reduce cognitive load.


Missing business logic according to the PR description

Bridge the gap between intent and implementation automatically. AI Reviewer now cross-references your PR description against the actual code changes, flagging any promised business logic that hasn't been implemented so you never merge incomplete features.


Direct comments about duplicated code

Keep your codebase DRY and sustainable with direct feedback on redundant logic. AI Reviewer identifies duplicated code and proposes meaningful, bite-sized refactors that reduce complexity and significantly lower long-term maintenance costs and tech debt creep.


How to access the AI Reviewer

The AI Reviewer is now available to all organizations that are subscribed to the Codacy Team or Business plan and integrated via GitHub. It can be enabled in the org-level Integration Settings or for individual repos. Check our documentation for details on how to get access, AI models used, and technical limitations.

We could not be more excited about this twofold milestone, bringing engineering leaders, developers, and security officers closer together in a shared mission to de-risk AI coding itself.

Try it for yourself

Scan your Pull Requests in minutes. Free 14-day trial, no credit card needed.

See them in action

Watch the full recording of our Product Engineer Luís Ventura demoing the new AI Risk Hub and AI Reviewer live at our latest Product Showcase.

What's next?

While the first iteration of AI Risk Hub establishes immediate code-level control through centralized merge policies, the landscape of AI-related risks is evolving quickly, and we will continue to update our AI rulesets to match new, emerging threats and attack surfaces.

Besides protecting the codebase from risky contributions, we also understand the need for security and compliance officers to inventory and track AI-related materials used across the codebase, including models, MCP Servers, and libraries. We are now actively interviewing customers as we explore the introduction of an AI Bill of Materials (AI BOM) for a future iteration of the AI Risk Hub.

As for the new AI Reviewer, we already see lots of exciting directions to take this forward, and are now collecting feedback from early users as we continue to build even smarter, faster, and more actionable PR feedback for AI-accelerated coding.

Have any questions, feedback, or ideas you’d like to share with us? We love hearing from our community, so please don't hesitate to drop us a message.
