Docker is a pretty awesome tool, I hope you’ll agree. With it, we can create environments for development, staging, production, and testing — or anything else — with next to no effort.

What’s more, using Docker makes building environments far quicker than it ever was before. Given that, plus a range of other benefits, it’s no wonder Docker’s gained such significant traction and popularity in recent years.

However, Docker, like any technology, isn’t perfect. One glaring shortcoming is how large Docker images can quickly become. This point was driven home to me a month or so ago when I stopped to wonder where a significant chunk of my Mac’s drive space had gone.

Do you have unused #Docker images lying around? Use “docker system prune” to clean them up. I just reclaimed 41GB.

— Matthew Setter 🇦🇺 (@settermjd) October 12, 2017

I ended up reclaiming 100GB.

— Matthew Setter 🇦🇺 (@settermjd) October 13, 2017

After a quick bit of searching, I found that over 100 GB (that’s right, 100 GB!) was being used by Docker images. To be fair, I’ve been doing quite a lot of experimenting with Docker while learning it, so it should come as no surprise that I’ve built a lot of images, which consume quite a bit of space. However, the fact that they took up this much blew my mind!

Why Should You Slim Down Docker Images?

On a local development machine, it might be okay for images to consume significant hard drive space and take a while to build or download. After all, if a build takes a while, you can always grab another coffee or tea, take a break from the screen to rest your eyes, grab some lunch, or discuss something with a colleague.

However, what about when deploying to testing, staging, and production? Or what if you’re pulling down large images to form the base of your Docker images or clusters in your CI/CD pipeline?

While bandwidth and disk space are cheap, time isn’t. If you’re not careful, you’ll waste precious (read: expensive) time waiting for images to download as you build and rebuild your images and push your deployments.

So today I’m going to step you through five ways to slim down your Docker images, so that you spend as little time, bandwidth, and disk space on them as possible.

How Do You Slim Down Docker Images?

That’s a good question. Thankfully, the answer isn’t too complicated. A little while ago, I watched a talk from Codeship that advocated several steps, and they form the basis of the following five. Let’s step through them.

One: Think Carefully About Your Application’s Needs

Do you genuinely think about the application that you’re deploying? Do you fully consider the language, framework, extensions, tools, and third-party packages which it needs? Or do you install everything, such as development tools and Linux headers, before installing your application?

Bear in mind that, as you’d expect, everything you install increases an image’s size. While it may be easier to work this way (some may argue that it’s quick and efficient), is it useful over the long term?

Alternatively, if you stop and consider your application’s needs in depth, you may be surprised at just how little it needs. Sure, you don’t get the thrill of jumping straight in and building something, and you might even find planning somewhat dull and tedious.

However, remember the old saying: “Proper Planning Prevents Poor Performance”. It’s an adage well worth remembering!

Two: Use a Small Base Image

What base image are you using? For quite some time, the default Docker image used Ubuntu, which made sense for several reasons. Let’s consider two of the biggest.

Firstly, Ubuntu is based on Debian, which uses the Apt package manager. Apt is one of the best thought-out and most feature-rich package managers available. What’s more, its interface is about as intuitive as you can get.

Secondly, Ubuntu is one of the most popular Linux distributions, so its conventions are well understood by a large percentage of developers and systems administrators.

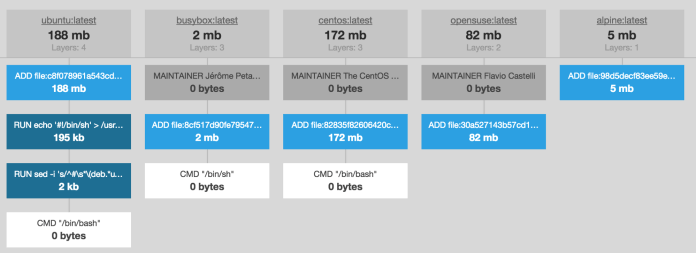

That said, when using Ubuntu as a foundation, your base image won’t be particularly small. Weighing in at around 188 MB, Ubuntu comes packed with most of the tools that you’re likely to need, and plenty that you won’t. It’s an excellent example of size being sacrificed for ease of use.

There’s nothing, necessarily, wrong with that, but if you want as small an image as possible, you’re going to have to use a different base image. Luckily, one is readily available and is the new default for Docker images; it’s called Alpine Linux.

Alpine Linux is:

a small Linux distribution based on musl and BusyBox, primarily designed for “power users who appreciate security, simplicity and resource efficiency”.

As a result, an image based on Alpine Linux is typically around 5 MB in size. That’s a size saving of around 97%!

Compare that to Ubuntu’s size and you can see how making this one change alone is a significant saving. To make it easier, here’s an image size comparison, from Brian Christner:

However, changing the default base image isn’t without cost. The first is that Alpine Linux doesn’t provide much functionality out of the box, so you’ll need a better understanding of what your image needs and the dependencies on which it relies.

The second is that Alpine uses APK instead of Apt. APK, while a little different from Apt, follows similar conventions to other Linux package managers, such as Yum, ZYpp, and Portage. While I’ve been using Apt for over ten years, transitioning to APK wasn’t that difficult, as its commands are pretty intuitive.

That said, if you have scripts for building images, you’ll have to refactor them to use APK commands and package names instead, which will take a little time. However, it should be a relatively straightforward process.
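As a rough illustration of that refactoring, here’s a minimal sketch of the same install step written for each package manager. The packages (curl and git) and the image tags are assumptions chosen purely for the example; the Apt version is shown in the comments, and the Alpine version is the one that actually builds.

```dockerfile
# Hypothetical example: installing curl and git on an Alpine base.
#
# On an Ubuntu base, the Apt version would look something like:
#   FROM ubuntu:16.04
#   RUN apt-get update \
#       && apt-get install -y curl git \
#       && rm -rf /var/lib/apt/lists/*
#
# On Alpine, the same step uses APK. The --no-cache option fetches the
# package index for this command only and doesn't keep a local cache in
# the image, so no separate clean-up step is needed.
FROM alpine:3.18
RUN apk add --no-cache curl git
```

The command names change (apt-get install becomes apk add), and package names can differ between the two distributions, so check each one when porting a script.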

Three: Use as Few Layers As Possible

How many layers make up your Docker image? “Layers?” you may be asking. If you’re not familiar with them, every Docker image is composed of several layers, one for each instruction in its Dockerfile (plus those in its base image).

    FROM php:7.0-apache

    COPY ./ /var/www/html/

    RUN chown -R www-data:www-data /var/www/html \
        && chown www-data:www-data /var/www
    RUN apt-get -y update
    RUN apt-get install -y libmcrypt-dev libzip-dev libpng12-dev libicu-dev libxml2-dev wget build-essential
    RUN apt-get install -y git vim
    RUN apt-get install -y npm nodejs-legacy

In the example Dockerfile above, the resulting Docker image will contain six layers (one for the COPY instruction and one for each of the five RUN instructions), in addition to the layers in the base image. Building Docker images this way allows Docker to be as efficient as possible.

If a change is made in one layer, then only that layer and the layers after it need to be rebuilt. Every layer before it can be fetched from the cache.

That way, build times are as quick as possible.
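To make the caching behaviour concrete, here’s a minimal sketch, assuming a hypothetical Alpine-based Apache image that serves the files in the build context. The apache2 package and its /var/www/localhost/htdocs document root are Alpine conventions assumed for the example, not part of the Dockerfile above.

```dockerfile
# Hypothetical sketch: order instructions from least to most frequently changed.
FROM alpine:3.18

# Changes rarely, so this layer is normally reused from the build cache.
RUN apk add --no-cache apache2

# Changes often. Editing files in the build context invalidates this layer
# (and any after it), but not the apk layer above.
COPY ./ /var/www/localhost/htdocs/
```

By contrast, in the php:7.0-apache example the COPY instruction comes first, so changing any application file means every RUN instruction after it is executed again on the next build.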

To know how many layers your image is composed of, and how large they are, run the docker history command, as in the following example:

    docker history ze-php7.1.11-alpine
    IMAGE          CREATED       CREATED BY                                     SIZE     COMMENT
    5e0644e052e4   7 days ago    /bin/sh -c #(nop) ENTRYPOINT ["/bootstrap…     0B
    6791d155bd2e   7 days ago    /bin/sh -c #(nop) EXPOSE 80/tcp                0B
    6db0e80c9902   7 days ago    /bin/sh -c #(nop) COPY dir:67f62ca0a16622f…    12MB
    b53ae8739f99   7 days ago    /bin/sh -c chmod +x /bootstrap/start.sh        10.1kB
    9fe7fa43babf   7 days ago    /bin/sh -c #(nop) ADD file:9fef33a371bdaef…    10.1kB
    70949f1e3e70   7 days ago    /bin/sh -c mkdir /app && mkdir /app/public…    0B
    969f39e455b7   7 days ago    /bin/sh -c mkdir /run/apache2 && sed -…        17.8kB
    6cfc7b7d4a34   7 days ago    /bin/sh -c apk --no-cache update && apk …      70.6MB
    946e205f9ff5   7 days ago    /bin/sh -c echo "http://dl-cdn.alpinelinux…    149B
    7bb295030e89   7 days ago    /bin/sh -c #(nop) LABEL maintainer=Paul S…     0B
    72dfe9e82e16   3 weeks ago   /bin/sh -c #(nop) CMD ["/bin/sh"]              0B
    <missing>      3 weeks ago   /bin/sh -c #(nop) ADD file:682bfba4bfda1ed…    4.15MB

There, you can see that it’s composed of twelve layers. For each layer you can see:

- The layer’s unique hash.

- When that layer was created.

- The command that created it.

- The layer’s size.

If you want to see an image’s combined size, use the docker images command, as in this example:

    docker images ze-php7.1.11-alpine
    REPOSITORY            TAG      IMAGE ID       CREATED      SIZE
    ze-php7.1.11-alpine   latest   5e0644e052e4   7 days ago   86.8MB

Then there’s another aspect of how layers work. Referring again to the Dockerfile example above, notice the last three RUN instructions. I’ve deliberately split the package installation into three separate apt-get install calls to highlight this point.

It first installs the required development packages, which subsequent packages depend on. It then installs several system binaries. Finally, it installs the application’s software packages.

Working this way might make sense during development, as the Dockerfile’s easy to read and is ordered logically. However, while it might be logical, it will result in a larger image than if those steps were combined.

It gets even worse. Do you install packages in one RUN instruction, only to remove them in a later one? You may wonder what the problem is, as the packages are ultimately deleted. Well, they’re not.

They’re deleted in a later layer, but they still exist in the layer where they were installed! Anything that exists in one layer isn’t removed by a later one; it’s only covered over, so that following layers can’t see it and it appears not to exist. The image still carries its full size.

This goes back to the point about caching that I made earlier: each layer is stored and reused as-is, so a later layer can hide an earlier layer’s files but can’t remove them.

That’s why:

- It’s better not to install a package in the first place if you don’t need it.

- If you must install a package temporarily, remove it in the same RUN instruction that installed it.
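Here’s a minimal sketch of the difference, using a hypothetical Alpine image that needs the build-base compiler toolchain only while it’s being built. The package and the .build-deps name are assumptions for illustration; --virtual is APK’s way of grouping packages under one name so that they can be removed together.

```dockerfile
# Hypothetical sketch. This version does NOT shrink the image: the packages
# still exist in the layer created by the first RUN, and the second RUN
# only hides them.
#
#   RUN apk add --no-cache build-base
#   RUN apk del build-base
#
# Installing, using, and removing the packages in a single RUN means they
# never persist in any layer.
FROM alpine:3.18
RUN apk add --no-cache --virtual .build-deps build-base \
    && echo "steps that need the compiler would run here" \
    && apk del .build-deps
```

Running docker history on images built from each variant is an easy way to compare the size of the layers they produce.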

How can we do this? Well, here are four ways:

- Develop the Dockerfile in logically separated blocks, but compact it in the final version.

- Don’t create files if you don’t have to — use streams and pipes as much as possible.

- Remove unnecessary files, such as cache files, or don’t use the cache in the first place.

- If you have to install something, choose the smallest option available.

Let’s see some of those in practice:

    # Add basics first
    RUN apk --no-cache update \
        && apk upgrade \
        && apk --no-cache add apache2 ca-certificates openntpd php7 php7-apache2 php7-sqlite3 php7-tokenizer \
        && cp /usr/bin/php7 /usr/bin/php \
        && rm -f /var/log/apache/*

Here, you can see that I’ve combined the package index update and the upgrade with the add command in a single RUN instruction, and used apk’s --no-cache option, so no extra files, such as the package index, are left on the filesystem. I then chained on the cp and rm commands, which take care of some housekeeping and clear out unnecessary files within the same layer.
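The same approach can be applied to the php:7.0-apache Dockerfile from earlier in this section. Here’s a sketch of a compacted version: the five RUN instructions become one, and the package lists that apt-get update downloads to /var/lib/apt/lists are deleted in the same layer. The package list is kept exactly as in the original, so treat this as an illustration of the technique rather than a tested build.

```dockerfile
FROM php:7.0-apache

COPY ./ /var/www/html/

# A single RUN instruction replaces the original five, so the ownership
# changes, the package index, all three sets of packages, and the clean-up
# share one layer. Deleting /var/lib/apt/lists/* in the same RUN means the
# downloaded package index never persists in the image.
RUN chown -R www-data:www-data /var/www/html \
    && chown www-data:www-data /var/www \
    && apt-get -y update \
    && apt-get install -y \
        libmcrypt-dev libzip-dev libpng12-dev libicu-dev libxml2-dev \
        wget build-essential git vim npm nodejs-legacy \
    && rm -rf /var/lib/apt/lists/*
```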

Do some experimenting and see how you can compact your Dockerfile configurations.

Four: Use .dockerignore files

Referring back to the earlier Dockerfile example, remember that its second instruction copied everything in the build context into the image’s /var/www/html directory? Were all of those files necessary? Could some of them have been left out?

If so (and it’s likely that some could have been), you can exclude them with a .dockerignore file. As the name implies, these files tell Docker which files and directories to leave out of the build context.

If you’re familiar with .gitignore files, then you’ll know what I mean. If not, have a look at the example below.

    vendor
    .idea
    data
    mysql

Using this configuration Docker would not copy any files in the vendor, .idea, data, or mysql directories of my project, saving both time and space in the process. .dockerignore files are convenient, and I strongly encourage you to become a whiz with them.

Five: Squash Docker Images

The last tip I want to offer is on squashing Docker images.

The idea here is that after your image is created, you then flatten it as much as possible, using a tool such as docker-squash.

Docker-squash is a utility to squash multiple docker layers into one to create an image with fewer and smaller layers. It retains Dockerfile commands such as PORT, ENV, etc.. so that squashed images work the same as they were originally built. In addition, deleted files in later layers are actually purged from the image when squashed.

The idea is to make your image as small as possible, so that transferring it is as quick as possible. However, while the concept is quite appealing, I was never able to use the tool effectively.

Perhaps it has something to do with running it on macOS rather than on a Linux distribution, but despite leaving it running for over an hour on a small image, there was no sign that the process would ever complete.

Given that, while docker-squash might drastically reduce my Docker image sizes, saving precious time pulling and pushing images, that saving seems outweighed by the time it takes to squash the image in the first place.

However, your mileage may vary, and I’m entirely new to the tool. So if you’ve found success with it, or a related tool, please share your thoughts in the comments.

In Conclusion

Those are five steps that you can take to reduce the size of your Docker images. One of them, or a combination, will work. Since some reduce image sizes more than others, always compare the size before and after applying each one.

Also, the size reduction from each step depends heavily on which of the other steps you’ve applied and on what your image needs to do.

With that said, I encourage you to foster a spirit of healthy competition in seeing just how small, just how compact your Docker images can become.
