Code quality beyond bug fixing: we did a live product AMA 🎙

On November 16th, we hosted our first live product AMA, called Code quality beyond bug fixing. In case you're wondering, AMA stands for Ask Me Anything, and it's the perfect place for you to interact with us and bring all your questions, doubts, and concerns.

In this AMA, we discussed code quality with our engineers and product managers and answered several interesting questions from the audience! If you missed it live, fear not: you can (re)watch the recording below👇

Our code quality experts

During the entire AMA, we had a team of 5 amazing code quality experts who have been involved in developing the Codacy and Pulse platforms.

Interesting stats from your side

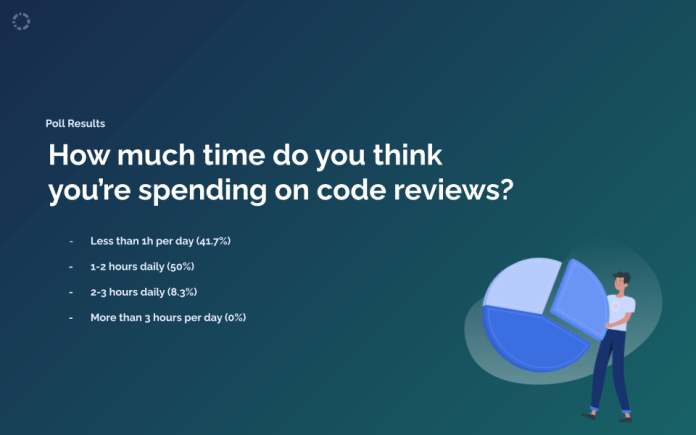

How much time do you think you’re spending on code reviews?

Exactly half of the audience said they spend 1-2 hours a day on code reviews, and more than 8% said they spend 2-3 hours a day! See how Codacy can help you win back that time for what you do best.

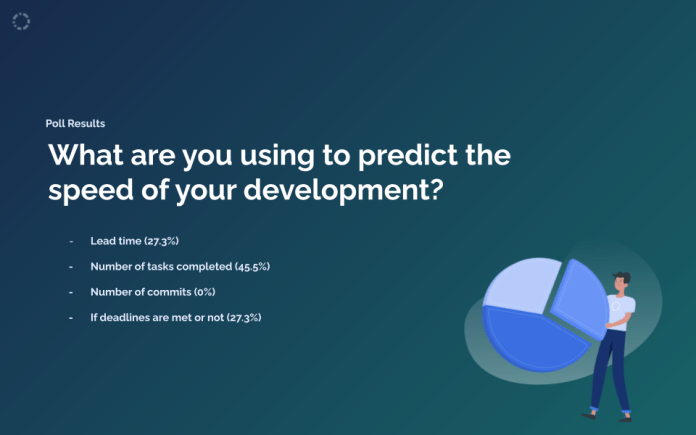

What are you using to predict the speed of your development?

With 45.5% of the answers, the largest share of the audience said they use the number of completed tasks to predict development speed. The remaining answers were split equally between lead time and whether deadlines are met.

Your questions, the stars of the show

After a short introduction to Codacy and Pulse, we opened the floor to all the audience's questions. You can watch the detailed answers in our video recording of the AMA; we even give you the specific timestamps! But we've also summarized the answers for you to read 🤓

Is Pulse integrated with Codacy? (00:16:40)

At the moment, no, they are two different products. In Codacy, you get the quality overview, and in Pulse, you get the performance overview. But that’s something that we are still exploring!

How do you measure complexity in Codacy? (00:17:27)

We measure cyclomatic complexity, and for each language, we use a different tool to measure it. Simply put, cyclomatic complexity counts the independent paths through your code, so every branch and loop you add increases it.
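Because Codacy delegates this metric to language-specific tools, the exact counting rules vary from tool to tool. Purely as an illustration, here is a minimal Python sketch of the classic McCabe approximation: start at 1 and add 1 for every decision point. The function name `cyclomatic_complexity` and the set of counted node types are our own assumptions, not the logic of any tool Codacy runs.

```python
import ast

# Assumed set of decision points for this simplified approximation.
# Real tools differ (e.g. in how they treat comprehension filters or `match`).
_BRANCH_NODES = (
    ast.If, ast.IfExp, ast.For, ast.AsyncFor, ast.While,
    ast.ExceptHandler, ast.comprehension,
)


def cyclomatic_complexity(source: str) -> int:
    """Approximate McCabe complexity of a snippet: 1 + number of decision points."""
    tree = ast.parse(source)
    complexity = 1
    for node in ast.walk(tree):
        if isinstance(node, _BRANCH_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # `a and b and c` adds one extra path per additional operand.
            complexity += len(node.values) - 1
    return complexity


SAMPLE = '''
def classify(n):
    if n < 0:
        return "negative"
    elif n == 0:
        return "zero"
    for d in range(2, n):
        if n % d == 0:
            return "composite"
    return "other"
'''

# Two `if`s (the `elif` is a nested If), one `for`, one inner `if`: 1 + 4 = 5.
print(cyclomatic_complexity(SAMPLE))
```

Note that nesting depth on its own doesn't change this number; only the count of branches and loops does.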

What actionable items do you recommend when the code quality goes down in a project? (00:18:36)

Your quality never drops when you start implementing a tool like Codacy 😎 because you’re catching things early on and not allowing new technical debt in. But if you do find your quality going down, you first need to figure out why. Then, see if people are taking more time to fix those issues, and here is where a tool like Pulse comes in handy 😏 Finally, try to prioritize it like you would prioritize product development.

How do you deal with false positives in the tool reports? (00:21:51)

It depends on what your false positives mean. Sometimes they mean you haven't configured your analysis in the way that best fits your team's definition of quality. If that's not the case, then depending on the tool, you can add annotations in your code, and Codacy will run the same tool you run locally and ignore the false positives. You can also manage how you ignore issues. If you'd like further guidance, feel free to reach out to us in our community or contact the support team directly through the in-app chat.
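As a sketch of what such in-code annotations can look like, here are two Python examples, assuming flake8 and Pylint are among the tools your analysis runs (check your own Codacy configuration; these two are only illustrations). In both cases the comment names one specific rule, so every other check still applies to that code. `DOCS_URL` and `handle` are hypothetical names.

```python
# flake8 skips only rule E501 ("line too long") on this one line, because
# wrapping the string would break the link for readers.
DOCS_URL = "https://example.com/a/very/long/documentation/path/that/should/not/be/wrapped/for/readability"  # noqa: E501


def handle(event, context):  # pylint: disable=unused-argument
    """Callback whose signature is fixed by a framework, so `context` is unused.

    The Pylint comment on the `def` line silences only the unused-argument
    message reported here; all other Pylint checks still run.
    """
    return {"status": "ok", "keys": sorted(event)}


print(handle({"b": 1, "a": 2}, None))
```

Keeping suppressions this narrow makes them easy to review, and a reviewer can see exactly which rule was judged a false positive.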

What is coverage in Codacy? (00:24:34)

If you have tests and coverage set up in your pipeline as part of your workflow, you can upload the coverage report to Codacy. In Codacy, you can set up quality gates that state the coverage can't drop below a certain threshold, and Codacy tells you whether it meets your standards.
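Codacy evaluates the gate on its side once the report is uploaded; the sketch below only illustrates the logic of a minimum-coverage gate. `CoverageReport` and `check_coverage_gate` are hypothetical names, and this version looks only at line coverage.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CoverageReport:
    """Hypothetical minimal summary of an uploaded coverage report."""
    covered_lines: int
    total_lines: int

    @property
    def percent(self) -> float:
        # Treat an empty report as 0% so an accidentally empty upload fails the gate.
        if self.total_lines == 0:
            return 0.0
        return 100.0 * self.covered_lines / self.total_lines


def check_coverage_gate(report: CoverageReport, min_percent: float) -> tuple[bool, str]:
    """Pass only if coverage is at or above the configured minimum."""
    pct = report.percent
    if pct >= min_percent:
        return True, f"Coverage {pct:.1f}% meets the {min_percent:.1f}% minimum"
    return False, f"Coverage {pct:.1f}% is below the {min_percent:.1f}% minimum"


ok, message = check_coverage_gate(CoverageReport(covered_lines=780, total_lines=1000), min_percent=80.0)
print(ok, message)  # False Coverage 78.0% is below the 80.0% minimum
```

Failing on an empty report is a deliberate choice here: it's safer for a misconfigured upload to block a merge than to pass silently.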

How is Pulse gathering information to measure the metrics it provides? Does it need access to Jira or other types of management tools? (00:26:08)

Pulse integrates with a Git provider (currently only GitHub), extracts data, and calculates the metrics based on that data. You can also enrich it with information from incident tracking tools, like PagerDuty or Datadog. We're working on a Jira integration and on adding more detail to our current metrics and dashboards!

What languages are supported in Codacy? (00:27:38)

Here’s a list of all the currently supported tools, both for front-end and back-end. We currently support 40+ languages, but we’re always trying to add more, so keep an eye on this list!

Are there specific features that static analysis tools commonly provide that you think are bad ideas or counter-productive? (00:29:30)

This was a juicy question; you're going to want to head over to the video 👀 But in a nutshell, static analysis is what you make of it. The biggest mistake is using a cookie-cutter, one-size-fits-all set of rules and never reviewing it. You don't want someone else's definition of quality. Instead, you should know what matters to you and your team, depending on the stage you're currently in.

Do you support (financially, OSS contributions, etc.) the open-source tools you’re using? (00:36:53)

As a company, we don't contribute back, but in the past, some of our people have individually given back to those tools through OSS development. By the way, did you know that if you have an open-source project, Codacy is free? It's true; go check it out!

How can Codacy insights and reports help decide which code quality problems we’ll tackle first? (00:42:37)

Currently, we have different dashboards, and you can, for example, get a good overall assessment of how well tests cover your repository code. You can also filter the issues found in your repositories by category and focus on the ones that matter to you the most. In 2022, we'll be focusing on the reporting and insights that we provide in Codacy, so we expect to have a much more robust product next year.

What is your favorite feature for a static analysis tool that some but too few tools support? (00:47:30)

That's an easy one: auto-fixes, or suggested fixes for issues! They remove a lot of work from developers, especially for those everyday issues that can be fixed automatically.
